Under Linux, there are a number of related features around marking areas of a file, filesystem, or block device as “no longer allocated”. In the standard view, here’s what happens if you fill a file to 500M and then truncate it to 100M, using the “truncate” syscall:

- create the empty file, filesystem allocates an inode, writes accounting details to block device.

- write data to file, filesystem allocates and fills data blocks, writes blocks to block device.

- truncate the file to a smaller size, filesystem updates accounting details and releases blocks, writes accounting details to block device.
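The three steps above can be tried out on a small scale. This is only a sketch: it uses 5M and 1M instead of 500M and 100M to keep it quick, the scratch directory and file name are made up for illustration, and it assumes GNU coreutils (`truncate`, `stat -c`).

```shell
# Work in a scratch directory so nothing else is touched.
workdir=$(mktemp -d)
f="$workdir/demo.bin"

# Step 1: create the empty file (the filesystem allocates an inode).
: > "$f"

# Step 2: write 5 MiB of data (the filesystem allocates data blocks).
dd if=/dev/zero of="$f" bs=1M count=5 2>/dev/null

# Step 3: truncate to 1 MiB (the filesystem releases the blocks past the new end).
truncate -s 1M "$f"

size_after=$(stat -c %s "$f")     # apparent size in bytes
echo "apparent size: $size_after bytes"
du -k "$f"                        # allocated size in KiB, as the filesystem accounts it

rm -rf "$workdir"
```

Both numbers only reflect the filesystem's own accounting; nothing here shows what the block device underneath believes about the released blocks.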

The important thing to note here is that in the third step the block device has no idea about the released data blocks. The original contents of the file are actually still on the device. (To a certain extent, this is why programs like shred exist.) While the recoverability of such released data is a whole other issue, the main problem with this lack of information is that some devices (like SSDs) could use it to their benefit, for example to extend their life. To support this, the “TRIM” set of commands was created so that a block device could be informed when blocks were released. Under Linux, this is handled by the block device driver, and what the filesystem can pass down is “discard” intent, which is translated into the needed TRIM commands.

So now, when discard notification is enabled for a filesystem (e.g. mount option “discard” for ext4), the earlier example looks like this:

- create the empty file, filesystem allocates an inode, writes accounting details to block device.

- write data to file, filesystem allocates and fills data blocks, writes blocks to block device.

- truncate the file to a smaller size, filesystem updates accounting details and releases blocks, writes accounting details and sends discard intent to block device.
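Sending discard intent only helps if the device underneath accepts it. A rough way to check is to look at the `discard_max_bytes` attribute the kernel exposes for each block device under `/sys/block`, where 0 means no discard support is reported. The helper name `report_discard` and its optional directory argument are made up for this sketch.

```shell
# Print, for each block device, whether the kernel reports discard support.
# The optional argument is the directory to scan (default: /sys/block).
report_discard() {
    sysroot=${1:-/sys/block}
    for q in "$sysroot"/*/queue/discard_max_bytes; do
        [ -r "$q" ] || continue          # no devices, or attribute missing
        dev=${q#"$sysroot"/}             # strip the leading directory
        dev=${dev%%/*}                   # keep only the device name
        max=$(cat "$q")
        if [ "$max" -gt 0 ]; then
            echo "$dev: discard supported (up to $max bytes per request)"
        else
            echo "$dev: no discard support reported"
        fi
    done
}

report_discard
```

On systems with util-linux, `lsblk --discard` presents similar information in table form.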

While SSDs can use discard to do fancy SSD things, there’s another great use for discard, which is to restore sparseness to files. Historically, if you created a sparse file (open, seek to size, close), there was no way, after writing data to this file, to “punch a hole” back into it. The best that could be done was to write zeros over the area, but those zeros still took up filesystem space. So, the ability to punch holes in files was added via the FALLOC_FL_PUNCH_HOLE option of fallocate. And when discard is enabled for a filesystem, these punched holes get passed down to the block device as well.
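Hole punching can be tried from the shell with the `fallocate` tool from util-linux, which wraps the same FALLOC_FL_PUNCH_HOLE option. This is a sketch with made-up file names and sizes; whether the punch succeeds depends on the filesystem the scratch directory lives on, so the failure case is handled too.

```shell
workdir=$(mktemp -d)
f="$workdir/sparse-demo.bin"

# Write 8 MiB of data so that the file is fully allocated.
dd if=/dev/zero of="$f" bs=1M count=8 2>/dev/null
sync
echo "allocated before: $(du -k "$f" | cut -f1) KiB"

# Punch a 4 MiB hole starting 2 MiB into the file. --punch-hole implies
# --keep-size, so only the allocation changes, not the file length.
if fallocate --punch-hole --offset 2MiB --length 4MiB "$f"; then
    sync
    echo "allocated after:  $(du -k "$f" | cut -f1) KiB"
else
    echo "hole punching is not supported on this filesystem"
fi

apparent=$(stat -c %s "$f")
echo "apparent size:    $apparent bytes"

rm -rf "$workdir"
```

The apparent size stays at 8 MiB either way; only the allocated size reported by `du` should drop when the punch works.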

Take, for example, a qemu/KVM VM running on a disk image that was built from a sparse file. While inside the VM instance, the disk appears to be 10G. Externally, it might only have actually allocated 600M, since those are the only blocks that have been written so far. In the instance, if you wrote 8G worth of temporary data, and then deleted it, the underlying sparse file would have ballooned by 8G and stayed ballooned. With discard and hole punching, it’s now possible for the filesystem in the VM to issue discards to the block driver, and then qemu could issue hole-punching requests to the sparse file backing the image, and all of that 8G would get freed again. The only downside is that each layer needs to correctly translate the requests into what the next layer needs.

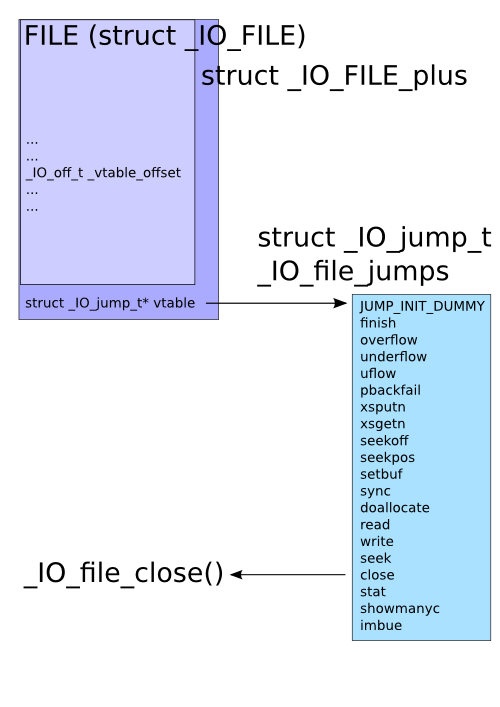

With Linux 3.1, dm-crypt supports passing discards from the filesystem above down to the block device under it (though this has cryptographic risks, so it is disabled by default). With Linux 3.2, the loopback block driver supports receiving discards and passing them down as hole-punches. That means that a stack like this works now: ext4, on dm-crypt, on loopback of a sparse file, on ext4, on SSD. If a file is deleted at the top, the discard will pass all the way down, releasing allocated blocks at every layer down to the SSD. The following walk-through demonstrates this.

Set up a sparse backing file, attach it to a loopback device, and create a dm-crypt device (with “allow_discards”) on it:

# cd /root

# truncate -s10G test.block

# ls -lk test.block

-rw-r--r-- 1 root root 10485760 Feb 15 12:36 test.block

# du -sk test.block

0 test.block

# DEV=$(losetup -f --show /root/test.block)

# echo $DEV

/dev/loop0

# SIZE=$(blockdev --getsz $DEV)

# echo $SIZE

20971520

# KEY=$(echo -n "my secret passphrase" | sha256sum | awk '{print $1}')

# echo $KEY

a7e845b0854294da9aa743b807cb67b19647c1195ea8120369f3d12c70468f29

# dmsetup create testenc --table "0 $SIZE crypt aes-cbc-essiv:sha256 $KEY 0 $DEV 0 1 allow_discards"
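For reference, the sector count and the fields of the table line above break down roughly as follows. This sketch only builds and prints the string; it does not call dmsetup, and the key is a placeholder rather than a real value.

```shell
# 10 GiB expressed in 512-byte sectors, the unit device-mapper tables use.
bytes=$((10 * 1024 * 1024 * 1024))
sectors=$((bytes / 512))
echo "$sectors"

# Placeholders for illustration only.
key="<hex key>"
dev="/dev/loop0"

table="0 $sectors crypt aes-cbc-essiv:sha256 $key 0 $dev 0 1 allow_discards"
# 0 $sectors            start sector and length of the mapping
# crypt                 device-mapper target
# aes-cbc-essiv:sha256  cipher specification
# $key                  key, hex encoded
# 0                     IV offset
# $dev 0                backing device and the starting sector on it
# 1 allow_discards      count of optional parameters, then the parameters
echo "$table"
```

The computed sector count matches what `blockdev --getsz` reported for the loop device.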

Now build an ext4 filesystem on it and mount it with the “discard” option. The mkfs options enable discard during mkfs and disable lazy initialization, so we can see the final size of the used space on the backing file without waiting for the background initialization at mount-time to finish:

# mkfs.ext4 -E discard,lazy_itable_init=0,lazy_journal_init=0 /dev/mapper/testenc

mke2fs 1.42-WIP (16-Oct-2011)

Discarding device blocks: done

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

655360 inodes, 2621440 blocks

131072 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2684354560

80 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

# mount -o discard /dev/mapper/testenc /mnt

# sync; du -sk test.block

297708 test.block

Now, we create a 200M file, examine the backing file allocation, remove it, and compare the results:

# dd if=/dev/zero of=/mnt/blob bs=1M count=200

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 9.92789 s, 21.1 MB/s

# sync; du -sk test.block

502524 test.block

# rm /mnt/blob

# sync; du -sk test.block

297720 test.block

Nearly all the space was reclaimed after the file was deleted. Yay!
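To put numbers on “nearly all”, here is the arithmetic on the three `du -sk` readings from the session above (all values in KiB). The variable names are just for this sketch.

```shell
before=297708     # allocated after mkfs and mount
with_blob=502524  # allocated after writing the 200M file
after_rm=297720   # allocated after removing it

grown=$((with_blob - before))        # growth caused by the file
reclaimed=$((with_blob - after_rm))  # space given back after rm
leftover=$((after_rm - before))      # what did not come back

echo "grown:     $grown KiB"
echo "reclaimed: $reclaimed KiB"
echo "leftover:  $leftover KiB"
```

The 200M of file data is 204800 KiB, so the backing file grew by slightly more than the data itself and ended up only 12 KiB larger than before the file was written.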

Note that the Linux tmpfs filesystem does not yet support hole punching, so the example above wouldn’t work if you tried it in a tmpfs-backed filesystem (e.g. /tmp on many systems).
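If you are not sure what a given directory is backed by, a quick probe can tell you the filesystem type and whether a hole can be punched there. This is a sketch assuming GNU `stat` and util-linux `fallocate`; the function name `probe_punch_hole` and the probe file name are made up.

```shell
# Report the filesystem type of a directory and whether hole punching works in it.
probe_punch_hole() {
    dir=$1
    fstype=$(stat -f -c %T "$dir")                        # filesystem type name
    probe=$(mktemp "$dir/punch-probe.XXXXXX") || return 2
    dd if=/dev/zero of="$probe" bs=4096 count=4 2>/dev/null
    if fallocate --punch-hole --offset 0 --length 4096 "$probe" 2>/dev/null; then
        echo "$dir ($fstype): hole punching works"
    else
        echo "$dir ($fstype): hole punching not supported"
    fi
    rm -f "$probe"
}

result=$(probe_punch_hole "${TMPDIR:-/tmp}")
echo "$result"
```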

© 2012, Kees Cook. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 License.